
Asynchronous programming in .NET

An abstract representation of concurrency, as hallucinated by OpenAI


Table of contents

- Introduction

- Mind refresher

- .NET ThreadPool class

- Writing asynchronous code

Introduction

At work, I recently had the opportunity to contribute to our internal AMQP pub-sub reference library for .NET Core applications. Specifically, I upgraded the library's main dependency and opted in to some concurrency optimizations at the AMQP consumer level.

Although the update itself was relatively straightforward, I realized that I wasn't comfortable enough with the topic of concurrency in .NET. Therefore, I spent some time reading various documentation and articles related to the subject.

While I was reading, I wrote a private note to organize and solidify the terms and concepts, which I'm now turning into this blog post.

I'm sharing this as a small gift to my future self and as a helpful reference for anyone who may need to investigate the same problem space.

Mind refresher

Before diving into the .NET-specific stuff, and although I was already familiar with it, I invested a few minutes to refresh some basic terminology and concepts.

Processes

A process represents an application or program. As simple as that.

Threads

A thread is a fundamental unit to which the OS allocates processor time inside a process.

Most of the time, when talking about threads we mean operating system (or platform) threads, not to be confused with abstractions provided by some languages, like Java's virtual threads.

OS threads are limited in number and parallel execution by the underlying host/hardware:

- The max number of threads that a process can spawn is usually in the tens of thousands (on my Mac, kern.num_threads: 20480). To avoid resource starvation, applications usually introduce their own limits with a thread pool.

- The max number of threads that can truly run in parallel is way lower and determined, with some approximation, by the number of CPU cores (8 on my laptop).

Threads usually have an associated state (a Context, to use the .NET terminology): extra baggage that might be required to resume a task at a later stage. This is not always explicitly mentioned in docs or included in code blocks, but it's something to be aware of, as we'll see later.

Concurrency

Concurrency means making progress on multiple tasks over the same period of time, but not necessarily simultaneously. This definition implies that concurrent tasks are tasks that can be interrupted and awaited if needed.

When thinking about concurrency I picture myself preparing a risotto:

- I put some water to boil and forget about it

- While I wait for the water to heat, I clean and cut leeks, onions, carrots, parsley, sometimes a potato, etc. Once done, I put them in the pot and forget about it for approximately 1 hour

- While I wait for the broth to be ready... I measure the rice to use, and then I start preparing some onion for the sauce

- And so on...

As you can see, I'm taking care of a lot of tasks concurrently, but not in parallel! I only have 2 hands, after all.

So, in a nutshell, concurrency is more about optimizing time and resources than going faster. The requirement for concurrency to happen is having tasks where you have to wait for some condition to happen (the water to boil for instance, or an HTTP response to be returned by an API). A performance improvement is usually a natural consequence of it.
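To make the risotto analogy a bit more concrete, here is a minimal sketch of two waits overlapping in time. The Risotto class, its method names and the 300 ms durations are made up for illustration; Task.Delay simply stands in for any condition you have to wait on (the water boiling, an HTTP response).

```csharp
using System;
using System.Threading.Tasks;

public static class Risotto
{
    // Hypothetical step: Task.Delay stands in for any wait (boiling water, an HTTP call...).
    public static async Task<string> WaitForAsync(string step, int milliseconds)
    {
        await Task.Delay(milliseconds); // no thread is blocked while we wait
        return step;
    }

    public static async Task<string[]> CookAsync()
    {
        // Start both waits before awaiting either of them, so they overlap in time.
        Task<string> water = WaitForAsync("water boiled", 300);
        Task<string> broth = WaitForAsync("broth ready", 300);

        // Results come back in the order the tasks were passed in.
        return await Task.WhenAll(water, broth);
    }
}
```

Awaiting Risotto.CookAsync() should take roughly as long as the longest wait rather than the sum of the two, even though nothing here necessarily runs in parallel.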

Parallelism

By contrast, parallelism means executing multiple tasks simultaneously. To reuse the same image we used before, we can imagine all the steps happening at the same time.

A drawing of a young boy cutting vegetables in parallel, as hallucinated by OpenAI
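For comparison, here is a small sketch of actual parallelism using Parallel.For from the Task Parallel Library, which spreads the iterations of a loop across the available cores. The ParallelSum class and the sum-of-squares workload are just illustrative assumptions.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ParallelSum
{
    // Sums the squares of 0..count-1, letting the runtime split iterations across cores.
    public static long SumOfSquares(int count)
    {
        long total = 0;
        Parallel.For(0, count,
            () => 0L,                                          // per-thread local subtotal
            (i, _, subtotal) => subtotal + (long)i * i,        // body, may run simultaneously
            subtotal => Interlocked.Add(ref total, subtotal)); // merge subtotals safely
        return total;
    }
}
```

Each participating thread accumulates its own subtotal, and the shared total is only touched once per thread, through Interlocked.Add, to avoid a data race.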


.NET ThreadPool class

Let's now start diving into .NET-specific content.

As mentioned in the refresher, applications usually limit their degree of parallelism with the help of a thread pool.

In .NET, this concept is implemented with the ThreadPool class, a utility that's meant to ease thread management and to mitigate the risk of accidental resource starvation.

Threads in .NET can be either foreground or background threads. Foreground threads keep the application alive: the process doesn't terminate until all of them have finished, whereas background threads don't prevent it from exiting.

All threads held by the ThreadPool class are background threads and there is only one ThreadPool instance per process/application.
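A quick way to observe this is to inspect the current thread from inside Task.Run and compare it with a thread created by hand. This is only a sketch: the ThreadKinds class and its method names are made up, while IsThreadPoolThread and IsBackground are properties of the Thread class.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ThreadKinds
{
    // Task.Run schedules work on the ThreadPool by default.
    public static Task<bool> RunsOnPoolThreadAsync() =>
        Task.Run(() => Thread.CurrentThread.IsThreadPoolThread);

    // ThreadPool threads are background threads.
    public static Task<bool> PoolThreadIsBackgroundAsync() =>
        Task.Run(() => Thread.CurrentThread.IsBackground);

    // A manually created thread is a foreground thread unless IsBackground is set to true.
    public static bool NewThreadIsBackgroundByDefault() =>
        new Thread(() => { }).IsBackground;
}
```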

Worker and CompletionPort threads

The ThreadPool keeps 2 different pools of threads internally: one for the worker threads and one for completionPort threads. While there’s technically no difference between worker and completion threads, they’re used for different purposes.

Worker threads refer to any thread other than the main thread of your application and can be used to do any kind of work, including waiting for some I/O to complete.

CompletionPort or I/O threads are threads that the ThreadPool reserves to dispatch callbacks from the IO completion port. But…

What is an IO Completion port?

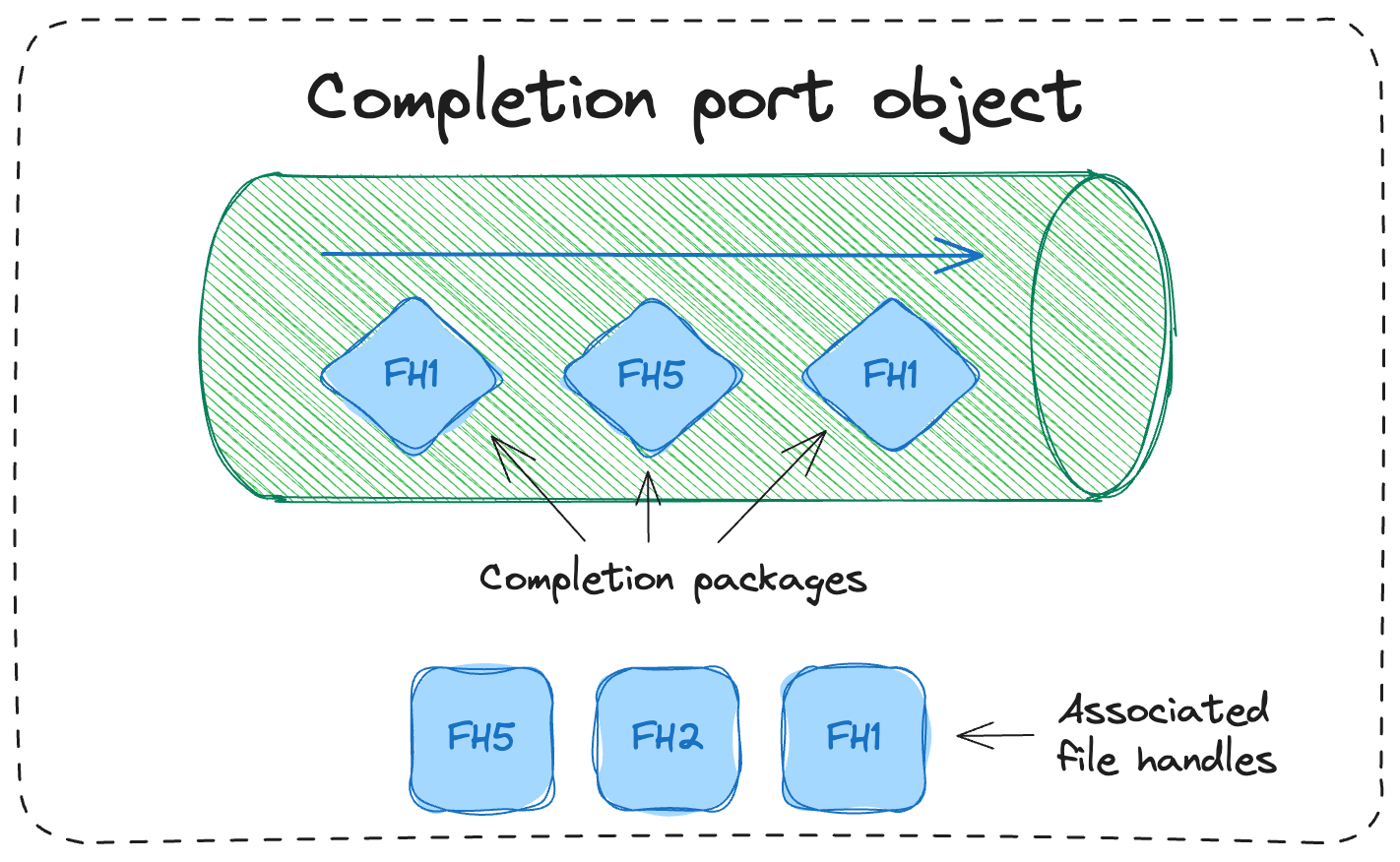

An I/O Completion Port (IOCP) is a queue-like operating system object that can be used to manage multiple I/O operations simultaneously. It is natively available on Windows NT operating systems.

An important component of IOCPs is file handles: handles to any asynchronous-able I/O endpoint, such as a file, a stream, a network socket…

Whenever an operation on any of the file handles completes, an I/O completion packet is queued into the IOCP. This is where IOCP threads come into play: they are in charge of completing the operation that was parked on the completion port.

Completion port representation


Why not simply offload work to a worker thread?

An alternative to using IOCP threads would be to just use worker threads, but by doing so we would block one of our workers just to wait for an event (on the file handle).

The ThreadPool class automatically monitors and manages IOCP threads.

What about UNIX?

As mentioned above, IOCP is a Windows-specific component, and as such not available as-is on UNIX systems. The Common Language Runtime (CLR) takes care of creating an abstraction of IOCP in UNIX systems as well. Delving into the host-specific implementation of IOCPs seemed out of scope for my objectives.

Default configuration

The thread pool provides new worker threads or I/O completion threads on demand until it reaches the minimum for each category. By default, the minimum number of threads is set to the number of processors on a system (the Environment.ProcessorCount property).

The maximum value configured by default might depend on multiple factors, but in general it is very high, and a program should never get close to it.

These are the values I got from a test on my 8-core M1 MacBook Pro:

Environment.ProcessorCount: 8

MinWorkerThreads: 8

MinCompletionPortThreads: 1

MaxWorkerThreads: 32767

MaxCompletionPortThreads: 1000

Custom values

The ThreadPool class comes with 2 static methods to update the minimum and maximum values for worker and IOCP threads, namely SetMinThreads and SetMaxThreads.

Minimum values are too high: resource starvation

A higher minimum number of threads increases the amount of context switching needed when jumping from thread to thread. CPU and memory usage will be affected.

Minimum values are too low: throttling delays

This note clarifies that the minimum number of worker/IOCP threads should line up with the expected burst of requests that your application can handle. Otherwise, throttling kicks in, adding a delay of ~500ms for each newly created thread.

This can lead to significant issues when your application receives a burst of requests greater than your minimum values.
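As a rough sketch of how the minimum could be raised to match an expected burst, something along these lines might be called once at application startup. The PoolTuning class and the expectedBurst parameter are hypothetical; GetMinThreads and SetMinThreads are the ThreadPool methods mentioned above.

```csharp
using System;
using System.Threading;

public static class PoolTuning
{
    // Raises the worker-thread minimum to at least `expectedBurst` (a hypothetical,
    // application-specific number), leaving the completion-port minimum untouched.
    public static bool EnsureMinWorkerThreads(int expectedBurst)
    {
        ThreadPool.GetMinThreads(out int worker, out int completionPort);
        if (worker >= expectedBurst) return true; // never lower an existing minimum

        // SetMinThreads returns false (and changes nothing) when the values are rejected,
        // for example when they are larger than the configured maximum.
        return ThreadPool.SetMinThreads(expectedBurst, completionPort);
    }
}
```

Checking the return value matters here, because a rejected call fails silently otherwise. Whether raising the minimum is a good idea at all depends on the trade-off described above, so treat the number as something to measure rather than guess.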

How to check your app configuration

To investigate your app's ThreadPool configuration, you can use the static GetMinThreads and GetMaxThreads methods of the ThreadPool class.

The Environment.ProcessorCount property can also be checked for additional context.
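A minimal sketch of such a check could look like the following. The PoolReport class is a made-up name, and the labels just mirror the listing shown earlier; the values will differ from machine to machine.

```csharp
using System;
using System.Threading;

public static class PoolReport
{
    // Builds a small report with the current ThreadPool limits.
    public static string Describe()
    {
        ThreadPool.GetMinThreads(out int minWorker, out int minIo);
        ThreadPool.GetMaxThreads(out int maxWorker, out int maxIo);

        return string.Join(Environment.NewLine,
            $"Environment.ProcessorCount: {Environment.ProcessorCount}",
            $"MinWorkerThreads: {minWorker}",
            $"MinCompletionPortThreads: {minIo}",
            $"MaxWorkerThreads: {maxWorker}",
            $"MaxCompletionPortThreads: {maxIo}");
    }
}
```

Printing it is then a matter of calling Console.WriteLine(PoolReport.Describe()).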

When does ThreadPool come into play

Quoting the .NET docs, the ThreadPool class is used in many different places:

.NET uses thread pool threads for many purposes, including Task Parallel Library (TPL) operations, asynchronous I/O completion, timer callbacks, registered wait operations, asynchronous method calls using delegates, and System.Net socket connections.

The easiest way to leverage it is to use the Task Parallel Library (TPL).

Basically, whenever you're using a Task or awaiting something async in your code, you're already taking advantage of it. Coming from a Java background, I have to admit that this sounded a bit unexpected but reassuring when I read it for the first time! It turns out that when doing asynchronous programming in .NET, developers can be sort-of safe without necessarily encountering headaches due to thread management problems.

Writing asynchronous code

There are usually 2 macro scenarios where you want to write asynchronous code. Again, I can't help but quote the very well-written official docs:

The core of async programming is the Task and Task<T> objects, which model asynchronous operations. They are supported by the async and await keywords. The model is fairly simple in most cases:

- For I/O-bound code, you await an operation that returns a Task or Task<T> inside of an async method.

- For CPU-bound code, you await an operation that is started on a background thread with the Task.Run method.

Let's see a couple of examples to clarify further.

IO-bound scenario

An IO-bound scenario manifests whenever you're waiting on I/O: a file, a network call... Let's say your code integrates with an external REST API to fetch weather forecast information.

A naive, blocking implementation of a hypothetical GetWeatherForecast function would be something like this:

public Forecast GetWeatherForecast(string city){

var request = new HttpRequestMessage(

HttpMethod.Get, $"https://weather-forecast.com/api/city={city}");

var response = _httpClient.Send(request);

var responseBody = new StreamReader(response.Content.ReadAsStream()).ReadToEnd();

// deserialise the response body into a Forecast instance and return it

return responseBody.ToForecast();

}

This code would completely block the execution of the current thread until a response from the weather-forecast.com API is returned, and new requests would queue up: not very efficient.

What we could do instead is leverage the asynchronous GetAsync method available on the HttpClient type, and free up the current thread while awaiting an HTTP response from the external API. To do so, we have to slightly amend our code:

public async static Task<Forecast> GetWeatherForecastAsync(string city){

var response = await _httpClient

.GetAsync($"https://weather-forecast.com/api/city={city}");

var responseBody = await response.Content.ReadAsStringAsync();

// deserialise the response body into a Forecast instance and return it

return responseBody.ToForecast();

}

Notice how we replaced the blocking calls with a few async and await keywords. First of all, we await the completion of the network call. Then, we also await when reading the raw response content into a string. Last but not least, to be able to use the await keyword we need to mark our signature with the async keyword and wrap the Forecast type into a Task wrapper. It's also a good practice to add the Async suffix to the name of our original GetWeatherForecast method.

In this last case, our code leverages native async methods offered by the .NET runtime via the HttpClient and HttpContent types.

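The await keyword included in our new code hides some sort of magic. When execution reaches an await on an operation that hasn't completed yet (the HTTP call or the reading of the response body in the above case), the method is suspended and the current thread is freed up and can serve other requests: no thread sits blocked while the I/O is in flight. Once the operation completes, the completion is dispatched through the IO threads described earlier and the method resumes from where it left off.

Hopefully this explains why I said earlier that leveraging async code is more about optimizing resource usage, and only secondarily about making your code go faster.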

CPU-bound scenario

The classic example of a CPU-bound scenario is when you have to run some expensive computation. In this case, you want to keep your service/app responsive, while running the numbers.

Unlike the IO-bound scenario, here there's not much to wait on: most of the work needs to happen locally on your host and cores, so what you should do is offload the expensive task to a worker thread.

public async static Task<Result> ComputeResult(){

var result = await Task.Run(() => {

// an expensive, time consuming calculation...

});

return result;

}

The content of the function passed to the Task.Run method gets offloaded to one of the ThreadPool's worker threads, while the current thread is freed up and can accept and process other requests without undesired side effects on the experienced responsiveness/latency.

Avoid calling anything Async

Notice how in the last ComputeResult method I did not add an Async suffix. What might initially look like an omission is in fact a deliberate choice. As brilliantly explained in this post, one should mark as Async only what is supposed to leverage IO threads! As I hope I've made clear by this point, concurrency != parallelism.

An individual interested in concurrency improvements is primarily concerned with efficiency rather than execution time: the focus is on making sure that the application can scale and handle as much load as it can.

Wrapping a call to Task.Run(...) inside a method whose name ends with Async probably does the exact opposite of what a consumer interested in concurrency is looking for.

A consumer invoking the following code would simply consume another (worker) thread, rather than reusing the existing resources:

public async static Task<Result> ComputeResultAsync(){

var result = await Task.Run(() => {

// an expensive, time consuming calculation...

});

return result;

}

Writing your own async method

Most of the time, our async methods (like the previous GetWeatherForecastAsync) leverage APIs that are natively async.

Anyone looking to implement their own asynchronous method should take advantage of the TaskCompletionSource<TResult> class.

How to use the TaskCompletionSource<TResult> class goes beyond the objective of this post.

The HttpContent's ReadAsStringAsync() implementation offers a juicy example though.

ConfigureAwait what?

In .NET each thread has an associated state, called Context. In a .NET application hosting a REST API, a context is for instance the HTTP request associated with an API call.

How this context behaves can be controlled via the Task class. By default, when a task is awaited, the current context is captured, and when execution resumes after the await, the original context is re-attached.

Resuming a thread with its original state might be useful for UI updates, but it's often (at least in my experience) not required. As these capture and re-attach operations affect the performance of our thread management, the general advice is to capture the thread's context only when required.

To opt out of the default context behavior you can use the ConfigureAwait(false) method. With reference to one of the code snippets above:

var forecast = await GetWeatherForecastAsync("Milan").ConfigureAwait(false);